Neural networks are computational systems modeled after the human brain. They are designed to recognize patterns and solve complex problems by processing information in a similar way to the neurons in the brain.

Neural networks are a type of machine learning algorithm that consists of interconnected layers of artificial neurons, also known as nodes or perceptrons. These interconnected nodes work together to process and analyze data, allowing the neural network to learn and make predictions.

Analogy to the Human Brain

The structure of neural networks is inspired by the biological structure of the human brain. Just as the brain is composed of interconnected neurons, neural networks consist of layers of interconnected artificial neurons.

Like the brain, neural networks can learn from experience and adapt their behavior. They can recognize patterns, make predictions, and even learn from their mistakes, just as the human brain does.

By mimicking the brain’s structure and functionality, neural networks are able to perform tasks such as image recognition, natural language processing, and data analysis with remarkable accuracy and efficiency.

In the following sections, we will explore the key components of neural networks, including the activation function, weights, biases, and the process of training a neural network. We will also dive into different types of neural networks, such as feedforward neural networks and recurrent neural networks, to gain a deeper understanding of their capabilities and applications.

Let’s embark on a journey to unravel the mysteries of neural networks and discover how these powerful tools are transforming the field of artificial intelligence.

Components of a Neural Network

Neural networks consist of several key components that work together to process and analyze data. Understanding these components is crucial for comprehending how neural networks function and how they can be optimized for various tasks.

- Neurons: Neurons are the fundamental units of a neural network. They receive inputs, perform computations, and produce outputs. Each neuron takes in input values, multiplies them by corresponding weights, sums the results together with a bias, and passes that sum through an activation function to calculate an output. Neurons are interconnected through layers, forming the architecture of the neural network.

- Activation Function: Activation functions determine the output of a neuron based on its inputs. Activation functions introduce non-linearity into the neural network, allowing it to learn and model complex relationships in the data. Common activation functions include the sigmoid function, hyperbolic tangent function, and rectified linear unit (ReLU) function.

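```python
import numpy as np

def relu(x):
    # Keep positive values and replace negative values with 0
    return np.maximum(0, x)

def tanh(x):
    # Squash values into the range (-1, 1)
    return np.tanh(x)

def softmax(x):
    # Subtract the maximum before exponentiating for numerical stability
    exp_x = np.exp(x - np.max(x))
    return exp_x / exp_x.sum(axis=0)

# Usage example
x = np.array([-2.0, 3.0, 0.5])
print("ReLU:", relu(x))
print("Tanh:", tanh(x))
print("Softmax:", softmax(x))
```

```
ReLU: [0.  3.  0.5]
Tanh: [-0.96402758  0.99505475  0.46211716]
Softmax: [0.00618829 0.91842297 0.07538875]
```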

- Weights and Biases: Weights and biases are essential parameters in a neural network. Each neuron has associated weights that determine the strength of connections between neurons in different layers. These weights are adjusted during the training process to optimize the network’s performance. Biases are additional parameters that are added to the weighted sum of inputs to introduce flexibility to the network’s decision-making process.

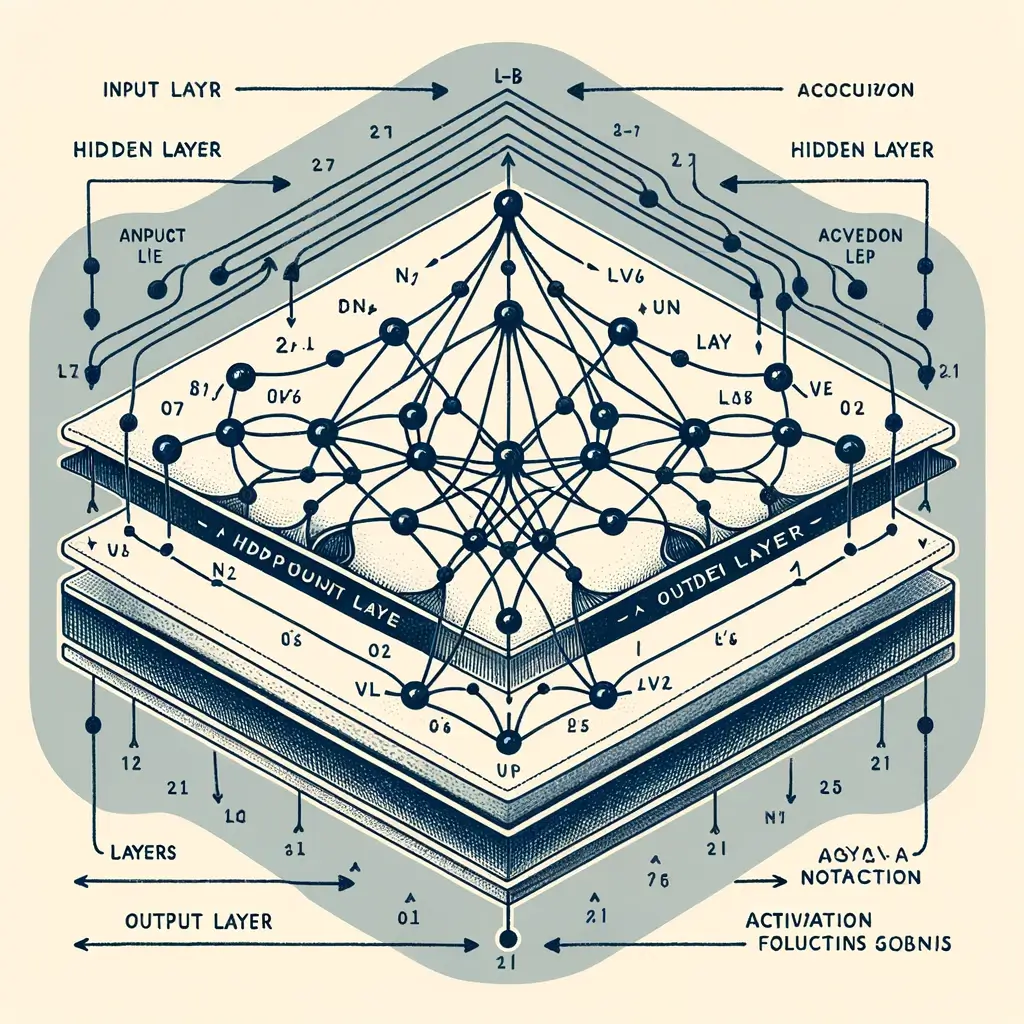

- Layers: Neurons in a neural network are organized into layers. Layers represent different levels of abstraction and perform specific computations. The three main types of layers are input layers, hidden layers, and output layers. Input layers receive the initial data, hidden layers process and transform the data, and output layers produce the final results. The depth and arrangement of layers in a neural network impact its ability to learn complex patterns and make accurate predictions.
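To make the roles of weights, bias, and activation function concrete, here is a minimal sketch of a single artificial neuron. The function name `neuron_output` and all numeric values are assumptions chosen for illustration, and the sketch uses only the Python standard library.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

# Illustrative values (assumed for this sketch)
inputs = [1.0, 2.0]
weights = [0.5, -0.25]

# 0.5 * 1.0 + (-0.25) * 2.0 = 0.0, so with a zero bias the output is sigmoid(0) = 0.5
print(neuron_output(inputs, weights, bias=0.0))  # 0.5
# A positive bias shifts the weighted sum upward and raises the output
print(neuron_output(inputs, weights, bias=2.0) > 0.5)  # True
```

A layer is simply many such neurons reading the same inputs, each with its own weights and bias.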

Understanding the components of a neural network is crucial for designing, training, and optimizing these powerful machine learning models. Each component plays a unique role in processing information and contributes to the network’s overall performance. By carefully configuring these components, researchers and practitioners can build neural networks that excel in various applications.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

# Define a simple neural network with:
# 1 input layer with 3 inputs, 1 hidden layer with 4 neurons, and 1 output layer with 2 neurons
weights_input_to_hidden = np.array([[0.5, -0.6, 0.1],
                                    [0.7, -0.8, 0.2],
                                    [-0.1, 0.9, -0.3],
                                    [0.3, 0.1, -0.4]])
weights_hidden_to_output = np.array([[0.2, -0.1, 0.4, 0.6],
                                     [-0.3, 0.8, -0.5, 0.2]])
bias_hidden = np.array([0.1, -0.2, 0.3, 0.4])
bias_output = np.array([-0.1, 0.2])

def simple_neural_network(inputs):
    # Hidden layer: weighted sum plus bias, then sigmoid
    hidden_layer_input = np.dot(weights_input_to_hidden, inputs) + bias_hidden
    hidden_layer_output = sigmoid(hidden_layer_input)
    # Output layer: same computation applied to the hidden activations
    output_layer_input = np.dot(weights_hidden_to_output, hidden_layer_output) + bias_output
    output = sigmoid(output_layer_input)
    return output

# Example input
example_input = np.array([0.7, -1.5, 0.2])
output = simple_neural_network(example_input)
print("Output:", output)
```

```
Output: [0.60530524 0.65013656]
```

How Neural Networks Learn

Neural networks are powerful machine learning algorithms that can learn and make predictions by adjusting their internal parameters. The learning process involves three key steps:

1. Feedforward Process

During the feedforward process, the neural network takes in input data and transmits it through its interconnected layers of nodes or neurons. Each neuron receives input signals from the previous layer, applies a weighted sum to these inputs, and then passes the result through an activation function. This process continues until the output layer is reached, where the final results or predictions are generated.
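The feedforward process described above can be sketched as a loop over layers. This is a minimal illustration using only the standard library; the `forward` function, the 2-3-1 layout, and every parameter value are assumptions made up for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(layers, inputs):
    """Propagate `inputs` through a list of (weights, biases) layers.

    Each weights entry is a list of rows, one row per neuron in that layer.
    Returns the activations of every layer, starting with the input itself.
    """
    activations = [list(inputs)]
    for weights, biases in layers:
        previous = activations[-1]
        current = [
            sigmoid(sum(w * a for w, a in zip(row, previous)) + b)
            for row, b in zip(weights, biases)
        ]
        activations.append(current)
    return activations

# Hypothetical 2-3-1 network with made-up parameters
layers = [
    ([[0.1, 0.2], [-0.3, 0.4], [0.5, -0.6]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.8, 0.9]], [0.05]),                                # output layer
]
activations = forward(layers, [1.0, 0.5])
print([len(a) for a in activations])  # [2, 3, 1]
```

Keeping the activations of every layer, rather than only the final output, is a deliberate choice here: the backward pass needs them to compute gradients.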

2. Backpropagation

Backpropagation is the step in which the network works out how much each weight contributed to the error, that is, the difference between the predicted outputs and the expected outputs. The goal is to adjust the weights so that this error shrinks and the network’s predictions become more accurate.

To calculate the error, the network compares its output with the ground truth. It then propagates this error backward through the network, adjusting the weights of the connections between neurons. This backward propagation allows the network to attribute the error proportionally to each neuron based on its contribution to the overall error.

```python
import numpy as np

def sigmoid_derivative(output):
    # Derivative of the sigmoid, written in terms of the sigmoid's own output
    return output * (1 - output)

def backpropagation(network_output, expected_output, learning_rate=0.01):
    # This is a simplified example showing the concept of backpropagation
    # Derivative of the squared-error loss (up to a constant factor) w.r.t. the network output
    error = network_output - expected_output
    # Chain rule through the sigmoid: gradient w.r.t. the output layer's weighted sum
    delta = error * sigmoid_derivative(network_output)
    # Update weights and biases accordingly (this is a conceptual representation)
    # weights -= learning_rate * delta * input
    # biases -= learning_rate * delta
    return delta

# Assuming we have a network
network_output = np.array([0.5, 0.8])  # Example network output
expected_output = np.array([0, 1])  # Expected output for the given example
backpropagation(network_output, expected_output)
```
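The backpropagation function above stops short of computing weight gradients. As a hedged sketch of what that involves, the following derives the gradients for a single sigmoid neuron with the chain rule and compares them against finite differences. The names `loss` and `gradients` and all numeric values are assumptions for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, target):
    """Squared error 0.5 * (y - target)^2 for a single sigmoid neuron."""
    y = sigmoid(w * x + b)
    return 0.5 * (y - target) ** 2

def gradients(w, b, x, target):
    """Analytic gradients of the loss via the chain rule."""
    y = sigmoid(w * x + b)
    delta = (y - target) * y * (1.0 - y)  # dL/dy * dy/dz
    return delta * x, delta               # dL/dw, dL/db

# Made-up values for illustration
w, b, x, target = 0.8, -0.2, 1.5, 1.0
grad_w, grad_b = gradients(w, b, x, target)

# Central finite differences as an independent check
eps = 1e-6
num_w = (loss(w + eps, b, x, target) - loss(w - eps, b, x, target)) / (2 * eps)
num_b = (loss(w, b + eps, x, target) - loss(w, b - eps, x, target)) / (2 * eps)

print(abs(grad_w - num_w) < 1e-8, abs(grad_b - num_b) < 1e-8)  # True True
```

In a multi-layer network the same chain rule is applied layer by layer, from the output back toward the input.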

3. Gradient Descent

Gradient descent is the optimization technique that uses the gradients computed by backpropagation to update the network’s weights. It aims to minimize the error by iteratively adjusting the weights in the direction of steepest descent of the error surface.

During gradient descent, the network calculates the gradient of the error with respect to each weight. This gradient represents the direction and magnitude of change needed to reduce the error. The network then updates the weights by taking small steps proportional to the negative gradient, gradually improving its performance.
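As a minimal sketch of this update rule, consider a single weight and an assumed error surface E(w) = (w - 3)^2, whose minimum is at w = 3. The function names and the surface itself are illustrative choices, not taken from a real network.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from `start`."""
    w = start
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w

# Illustrative error surface E(w) = (w - 3)^2 with gradient 2 * (w - 3)
def grad_E(w):
    return 2.0 * (w - 3.0)

w_final = gradient_descent(grad_E, start=0.0)
print(round(w_final, 6))  # 3.0
```

On this particular surface, a learning rate above 1.0 makes each step overshoot the minimum by more than the previous distance, so the weight diverges instead of converging. This is why the step size is usually kept small.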

By repeating the feedforward process, backpropagation, and gradient descent iteratively, neural networks can learn to make accurate predictions and improve their performance over time.

```python
import numpy as np

def train_neural_network(inputs, targets, epochs=100, learning_rate=0.01):
    # Skeleton of a training loop. It reuses simple_neural_network() and
    # backpropagation() from the earlier examples.
    # Initialize your neural network here (not shown for brevity)
    for epoch in range(epochs):
        for x, target in zip(inputs, targets):
            # Perform a feedforward pass
            output = simple_neural_network(x)  # Simplified for illustration
            # Compute error and perform backpropagation
            backpropagation(output, target, learning_rate)
            # Update weights and biases based on the error (not shown for brevity)
        # Optionally, print out loss and accuracy here for monitoring

# Example usage with two illustrative samples (3 input values and 2 target values each)
inputs = np.array([[0.7, -1.5, 0.2], [0.1, 0.4, -0.3]])
targets = np.array([[0, 1], [1, 0]])
train_neural_network(inputs, targets)
```
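The training skeleton above leaves out the weight updates. As a minimal sketch of a complete loop, the following trains a single sigmoid neuron on the logical OR function using only the standard library. The dataset, the zero initialization, and the hyperparameters are assumptions chosen to keep the example small and repeatable.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(samples, epochs=2000, learning_rate=0.5):
    """Train one sigmoid neuron with per-sample gradient descent."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            y = predict(weights, bias, x)         # feedforward
            delta = (y - target) * y * (1.0 - y)  # backpropagated error
            # Gradient descent step on each weight and on the bias
            weights = [w - learning_rate * delta * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * delta
    return weights, bias

# Logical OR as a toy dataset (chosen only for illustration)
samples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train(samples)
print([round(predict(weights, bias, x)) for x, _ in samples])  # [0, 1, 1, 1]
```

A single neuron is enough here because OR is linearly separable; problems such as XOR need at least one hidden layer.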

Types of Neural Networks

Neural networks are a fundamental component of artificial intelligence and machine learning. They mimic the functioning of the human brain and are capable of processing complex data to make predictions or classifications. Here are four common types of neural networks:

- Feedforward Neural Networks: Feedforward neural networks are the most traditional and basic type of neural network. They consist of interconnected nodes or artificial neurons organized in different layers, including an input layer, one or more hidden layers, and an output layer. Information flows in one direction, from the input layer through the hidden layers to the output layer. These networks are primarily used for tasks such as pattern recognition, classification, and regression.

- Recurrent Neural Networks: Recurrent neural networks (RNNs) are designed to handle sequential data, such as time series or natural language text. Unlike feedforward neural networks, RNNs have cyclic connections, allowing information to flow in loops. This enables RNNs to retain information from previous inputs, making them suitable for tasks that require memory. RNNs are commonly used for speech recognition, language translation, and sentiment analysis.

- Convolutional Neural Networks: Convolutional neural networks (CNNs) are specifically designed for processing and analyzing image and video data. CNNs utilize a unique architecture that consists of convolutional layers, pooling layers, and fully connected layers. Convolutional layers apply filters to input data, extracting features at various levels of abstraction. Pooling layers downsample the feature maps, reducing the network’s spatial dimensions. CNNs have significantly advanced computer vision tasks, including image classification, object detection, and image segmentation.

- Long Short-Term Memory Networks: Long Short-Term Memory (LSTM) networks are a specific type of recurrent neural network that can model long-term dependencies and track information across longer sequences. LSTMs are equipped with memory cells and a set of gates to control the flow of information. These networks have proven to be effective in tasks that involve analyzing sequences of data, such as speech recognition, handwriting recognition, and language modeling.
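Two of the mechanisms named in this list, convolution with pooling and recurrence, can be sketched in a few lines. This is a deliberately reduced illustration: it works on one-dimensional data with fixed, hand-picked parameters, whereas real CNNs and RNNs operate on multi-dimensional tensors with learned weights. All function names and values are assumptions.

```python
import math

def conv1d(signal, kernel):
    """Slide `kernel` over `signal` (valid cross-correlation, stride 1)."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

def max_pool1d(values, size=2):
    """Downsample by keeping the maximum of each non-overlapping window."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]

def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a scalar recurrent unit: the new state depends on the old one."""
    return math.tanh(w_x * x + w_h * h_prev + b)

# Convolution + pooling: an edge-detecting filter applied to a step signal
features = conv1d([0, 0, 1, 1, 1, 0], kernel=[-1, 1])
print(features)              # [0, 1, 0, 0, -1]
print(max_pool1d(features))  # [1, 0]

# Recurrence: the hidden state carries information across the sequence
h = 0.0
for x in [1.0, 0.0, 0.0]:
    h = rnn_step(x, h, w_x=1.0, w_h=0.5, b=0.0)
print(h > 0.0)  # True: the first input still influences the final state
```

The filter responds with 1 where the signal rises and -1 where it falls, which is the kind of local feature a convolutional layer extracts. The recurrent state, in turn, stays positive two steps after the only nonzero input.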

Each type of neural network has its own strengths and applications. Choosing the appropriate neural network architecture is crucial for achieving optimal performance in specific tasks. By leveraging the capabilities of these networks, researchers and practitioners can tackle a wide range of real-world problems and drive advancements in artificial intelligence and machine learning.

Applications of Neural Networks

Neural networks, a type of machine learning model, have a wide range of applications across various fields. They excel in solving complex problems by mimicking the human brain’s ability to learn and process information. Here are some key applications of neural networks:

1. Image Recognition

Neural networks have revolutionized the field of image recognition. By analyzing large datasets, neural networks can identify objects, patterns, and even faces in images with remarkable accuracy. This has numerous practical applications, including facial recognition systems, object detection in autonomous vehicles, and security surveillance.

2. Natural Language Processing

Another significant application of neural networks is in natural language processing (NLP). Neural networks can analyze and understand human language, enabling machines to comprehend and generate text. This technology powers virtual assistants like Siri and chatbots, facilitating human-like interactions and language translation.

3. Speech Recognition

Neural networks play a vital role in speech recognition applications. They can convert spoken words into written text through a process known as automatic speech recognition. This technology is widely used in voice-controlled systems, transcription services, and virtual assistants.

4. Autonomous Vehicles

Neural networks are at the core of developing self-driving capabilities in autonomous vehicles. By processing vast amounts of sensor data in real-time, neural networks enable vehicles to perceive and interpret their environment accurately. This includes identifying objects, recognizing traffic signs, and anticipating movements, ensuring safe navigation and driving.

In summary, neural networks have revolutionized various industries with their applications in image recognition, natural language processing, speech recognition, and autonomous vehicles. Their ability to learn and make complex decisions has opened up new possibilities for solving real-world problems.

Limitations and Challenges of Neural Networks

Neural networks have gained immense popularity in various fields for their ability to learn and make predictions. However, they are not without their limitations and challenges. Understanding these limitations is crucial for effectively applying neural networks in real-world scenarios. Let’s explore some of the key limitations and challenges associated with neural networks:

Overfitting and Underfitting: Striking the Right Balance

One of the major challenges in training neural networks is finding the right balance between overfitting and underfitting. Overfitting occurs when the model learns to identify patterns in the training data too well, resulting in poor performance on unseen data. On the other hand, underfitting happens when the model fails to capture the underlying patterns in the data and performs poorly on both the training and testing data.

To overcome these challenges, techniques such as regularization, cross-validation, and early stopping can be employed to prevent overfitting and achieve a well-generalized model.
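Of the techniques just mentioned, early stopping is the simplest to sketch. The function below decides when training would halt, given a history of validation losses. The `patience` parameter name, the function name, and the loss values are assumptions for illustration, not the API of any particular library.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch at which training would stop.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1  # never triggered: ran to the last epoch

# Made-up validation losses: improving at first, then rising (overfitting)
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
print(early_stopping_epoch(val_losses))  # 5
```

In practice one would also keep a copy of the weights from the best epoch (index 3 here) and restore them after stopping.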

Explainability: Interpreting the Decisions Made by the Network

Neural networks, especially deep learning models, are often referred to as “black boxes” due to their inherent lack of explainability. The decisions made by neural networks are based on complex computations involving numerous interconnected layers and parameters, making it challenging to understand the reasoning behind their predictions.

As neural networks are increasingly being used in critical areas such as healthcare and finance, the lack of interpretability becomes a significant concern. Researchers are actively working on developing methods to interpret and explain the decisions made by neural networks, including techniques such as layer-wise relevance propagation and attention mechanisms.

Training Data Limitations: Impact on Network Performance

The quality and quantity of training data significantly impact the performance of neural networks. Insufficient or biased training data can lead to inaccurate predictions and biased decision-making. Additionally, neural networks are highly dependent on the diversity and representativeness of the training data to learn generalizable patterns.

Collecting and curating large, diverse, and high-quality training datasets can be a resource-intensive task. Insufficient training data can be addressed by techniques like data augmentation and transfer learning. However, addressing biases in the training data requires careful consideration and mitigation strategies to ensure fairness and avoid algorithmic bias.
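Data augmentation, mentioned above, creates extra training examples by applying small label-preserving changes to existing ones. The following is a minimal sketch for tiny grayscale images stored as nested lists; the `augment` function, the flip probability, and the noise range are assumptions chosen for illustration.

```python
import random

def augment(image, rng):
    """Return a randomly altered copy of a 2D grayscale image (list of rows)."""
    rows = [list(row) for row in image]
    if rng.random() < 0.5:
        rows = [row[::-1] for row in rows]  # horizontal flip
    # Add small random noise and clamp pixels to the [0, 1] range
    return [
        [min(1.0, max(0.0, pixel + rng.uniform(-0.05, 0.05))) for pixel in row]
        for row in rows
    ]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
image = [[0.0, 0.5, 1.0],
         [0.2, 0.4, 0.6]]
augmented = [augment(image, rng) for _ in range(4)]
print(len(augmented), len(augmented[0]), len(augmented[0][0]))  # 4 2 3
```

Which transformations are safe depends on the task: a horizontal flip preserves the label of most natural photos but would change the meaning of text or digits.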

In summary, while neural networks offer powerful learning and prediction capabilities, they are not without limitations and challenges. Striking the right balance between overfitting and underfitting, interpreting the decisions made by the network, and addressing training data limitations are key areas of focus for researchers and practitioners working with neural networks.

Future of Neural Networks

Neural networks have already made significant strides in various fields, but their future holds even more promise and potential. Here are two key aspects that will shape the future of neural networks:

Advancements in Deep Learning and Neural Network Architectures

Deep learning, a subset of machine learning, focuses on training artificial neural networks to learn and make decisions similar to the human brain. In the future, we can expect advancements in deep learning and neural network architectures that will drive innovation in several areas:

1. More Complex Networks: As researchers delve deeper into network architectures, we can anticipate the development of more complex and sophisticated neural networks. These networks will allow for deeper levels of abstraction, enabling the understanding and processing of even more intricate patterns and structures.

2. Improved Performance: Ongoing research efforts will likely lead to enhancements in network performance, including faster training and inference times. This will enable the deployment of neural networks across a wide range of applications, from real-time decision-making systems to resource-intensive tasks such as video analysis and natural language processing.

3. Transferable Knowledge: The transfer of knowledge between different neural network models is likely to become more widespread. This means that knowledge acquired in one domain can be successfully applied to another. This transfer learning capability will accelerate the development of new applications and improve the efficiency of training neural networks.

Ethical Considerations and the Responsible Use of Neural Networks

As neural networks become more powerful and prevalent, it is crucial to address the ethical considerations and promote their responsible use. Here are some key points to consider:

1. Bias and Fairness: Neural networks are only as unbiased as the data they are trained on. Ensuring fairness and mitigating bias in data collection and model development will be essential. Organizations and researchers must actively work towards building more diverse and representative datasets to reduce inherent bias.

2. Privacy and Security: Neural networks often rely on large amounts of personal data. Protecting this data and ensuring user privacy will continue to be a pertinent concern. Stricter regulations and robust security measures must be put in place to safeguard sensitive information and prevent unauthorized access.

3. Accountability and Transparency: As neural networks make crucial decisions in various domains, it becomes necessary to create frameworks that enable transparency and accountability. Understanding how decisions are made by the network and being able to explain the reasoning behind them will help build trust and ensure responsible usage.

4. Human Oversight: While neural networks have the potential to automate decision-making processes, human oversight and intervention must remain integral. Human experts should be actively involved in verifying and validating the outputs of neural networks to prevent potential errors or biases.

In conclusion, the future of neural networks holds immense potential for advancements in deep learning and neural network architectures. However, it is vital to accompany these advancements with ethical considerations and responsible use to address biases, ensure privacy, promote accountability, and maintain human oversight. By embracing these principles, we can shape a future where neural networks positively impact various domains while upholding societal values.

Conclusion: Unlocking the Power of Neural Networks

In conclusion, neural networks have emerged as a powerful tool in various fields, showcasing their ability to learn and make complex predictions. Throughout this section, we have explored the fundamental concepts and practical applications of neural networks, shedding light on their potential to revolutionize industries and solve real-world problems.

From image recognition and natural language processing to autonomous vehicles and medical diagnostics, neural networks have proven their worth in countless domains. By mimicking the structure and function of the human brain, these powerful algorithms have demonstrated remarkable accuracy and versatility.

By leveraging the power of neural networks, businesses and researchers can unlock new opportunities and gain valuable insights from massive amounts of data. These networks have the capability to uncover hidden patterns, make accurate predictions, and automate complex tasks, leading to increased efficiency and innovation.

To fully harness the power of neural networks, further exploration and experimentation are encouraged. As the field of machine learning and artificial intelligence continues to evolve, there is ample room for advancements and discoveries. By staying abreast of the latest research and developments, individuals can stay at the forefront of this exciting field.

Neural networks hold immense potential and are poised to drive significant advancements across industries. By embracing this technology and continuing to push the boundaries, we can unlock new possibilities and change the way we live, work, and interact with the world.
