Introduction: Understanding Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) play a significant role in the field of machine learning, particularly in processing sequential data. They are designed to handle data that has a temporal or sequential relationship, making them suitable for tasks such as language translation, speech recognition, and time series analysis.

Definition and Purpose of RNNs

At its core, an RNN is a type of artificial neural network that contains loops or cycles in its architecture, allowing it to retain information from previous inputs while processing current inputs. This ability to capture temporal dependencies is what sets RNNs apart from feedforward neural networks, which have no such loops.

The main purpose of an RNN is to model sequential data by considering the influence of past inputs on present outputs. This temporal memory allows RNNs to make predictions based on the context provided by past inputs, making them effective for tasks that involve predicting the next element in a sequence.

Overview of RNN Architecture and Functionality

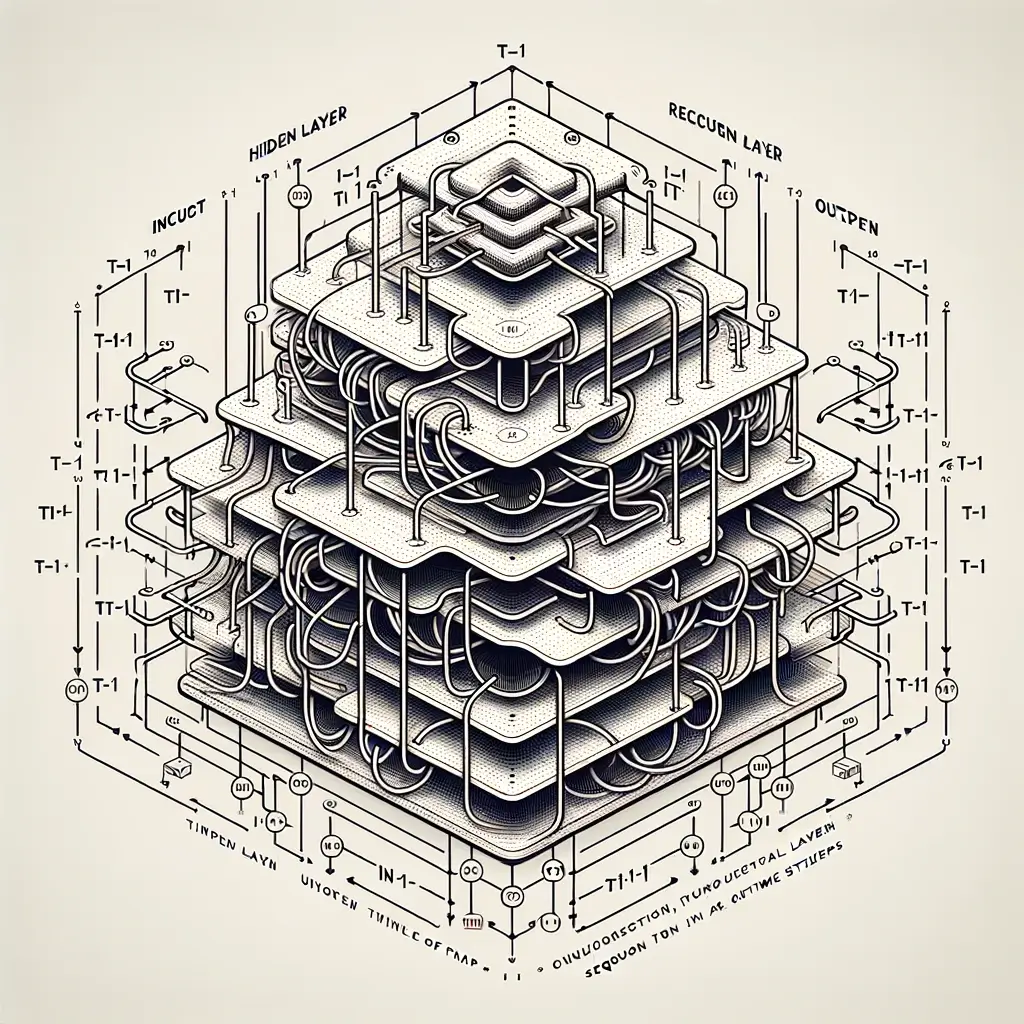

The architecture of an RNN consists of three main components: an input layer, a hidden layer with recurrent connections, and an output layer. The input layer receives sequential data, which is passed through the hidden layer, where the recurrent connections enable information to flow from one step of the sequence to the next.

In terms of functionality, an RNN processes sequential data step by step. At each time step, the RNN takes an input and combines it with its hidden state from the previous time step. The result is used to compute an output and to update the RNN's internal (hidden) state. Repeating this for every time step lets the RNN learn patterns and dependencies across the entire sequence.

```python
# TensorFlow example of a basic RNN layer
import tensorflow as tf

# Define a simple RNN layer with 100 units
rnn_layer = tf.keras.layers.SimpleRNN(units=100, activation='tanh')

# Input for the RNN: (batch_size, time_steps, input_features)
inputs = tf.random.normal([32, 10, 8])

# Output of the RNN. With the default return_sequences=False, only the
# final hidden state is returned: (batch_size, units)
output = rnn_layer(inputs)

print("Output Shape:", output.shape)
# Output Shape: (32, 100)
```
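The step-by-step update can be written as h_t = tanh(x_t W_x + h_{t-1} W_h + b). The following is a minimal NumPy sketch of that recurrence, not Keras's implementation. The names `simple_rnn_forward`, `W_x`, `W_h` and `b` are chosen here for illustration, and the weights are random rather than trained. The sketch returns the last hidden state, which has the same (batch_size, units) shape as the `SimpleRNN` output above.

```python
import numpy as np

def simple_rnn_forward(inputs, W_x, W_h, b):
    """Run a vanilla RNN over a batch of sequences (illustrative sketch).

    inputs: (batch, time_steps, input_features)
    W_x:    input-to-hidden weights, (input_features, units)
    W_h:    hidden-to-hidden (recurrent) weights, (units, units)
    b:      bias, (units,)
    Returns the final hidden state, (batch, units).
    """
    batch, time_steps, _ = inputs.shape
    h = np.zeros((batch, W_h.shape[0]))  # initial state h_0 = 0
    for t in range(time_steps):
        # Combine the current input with the previous hidden state.
        h = np.tanh(inputs[:, t, :] @ W_x + h @ W_h + b)
    return h

rng = np.random.default_rng(0)
inputs = rng.normal(size=(32, 10, 8))
units = 100
W_x = rng.normal(scale=0.1, size=(8, units))
W_h = rng.normal(scale=0.1, size=(units, units))
b = np.zeros(units)

last_state = simple_rnn_forward(inputs, W_x, W_h, b)
print("Final hidden state shape:", last_state.shape)  # (32, 100)
```

The same three weight tensors are reused at every time step. This weight sharing is what lets one set of parameters process sequences of any length.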

Importance of RNNs in Processing Sequential Data

RNNs are particularly important for processing sequential data because their hidden state carries context from past inputs forward, which in principle lets them capture long-term dependencies. This makes them well suited to tasks such as natural language processing, where the meaning of a word or phrase is often influenced by the words that precede it.

By conditioning on the preceding context of a sequence, RNNs can make more accurate predictions and generate more meaningful outputs. For example, in language translation, an RNN can take into account the words that have already been translated to produce a more coherent and contextually appropriate translation.

In short, RNNs are a powerful tool in machine learning that model sequential data by capturing temporal dependencies. Their architecture lets them retain information from previous inputs, which makes them well suited to tasks that involve processing sequential data and making predictions based on the context provided by past inputs.

Historical Development and Evolution of RNNs

Recurrent Neural Networks (RNNs) have a rich history of development and evolution. This section delves into the origins of RNNs, the challenges faced by early models, and the key breakthroughs that have shaped RNN research.

1. Origins and early models of RNNs

The concept of RNNs can be traced back to the 1980s. Paul Werbos's work on backpropagation [1], which he later extended to recurrent networks as backpropagation through time (BPTT), laid the foundation for training them. BPTT unrolls the network over the time steps of a sequence and propagates errors backward through the unrolled steps, allowing the network to learn from sequential input data.

Early RNN models, such as Jordan networks (1986) and Elman networks (1990), demonstrated the potential of RNNs for processing sequential data. Both give the network memory through context units. Elman networks feed the previous hidden state back into the hidden layer, while Jordan networks feed back the previous output instead.
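The difference between the two designs can be sketched in NumPy. This is a simplified illustration with hypothetical random weights and no biases, and it omits details of the original models such as the self-connections on Jordan's context units. The Elman loop feeds back the previous hidden state, while the Jordan loop feeds back the previous output.

```python
import numpy as np

rng = np.random.default_rng(1)
n_in, n_hidden, n_out = 3, 4, 2

# Hypothetical parameters shared by both sketches.
W_x = rng.normal(scale=0.5, size=(n_in, n_hidden))       # input -> hidden
W_h = rng.normal(scale=0.5, size=(n_hidden, n_hidden))   # Elman: hidden -> hidden
W_y = rng.normal(scale=0.5, size=(n_out, n_hidden))      # Jordan: output -> hidden
V = rng.normal(scale=0.5, size=(n_hidden, n_out))        # hidden -> output

def elman(xs):
    h = np.zeros(n_hidden)
    outputs = []
    for x in xs:
        h = np.tanh(x @ W_x + h @ W_h)   # context = previous hidden state
        outputs.append(h @ V)
    return np.array(outputs)

def jordan(xs):
    y = np.zeros(n_out)
    outputs = []
    for x in xs:
        h = np.tanh(x @ W_x + y @ W_y)   # context = previous output
        y = h @ V
        outputs.append(y)
    return np.array(outputs)

xs = rng.normal(size=(5, n_in))
print(elman(xs).shape, jordan(xs).shape)  # (5, 2) (5, 2)
```

At the first step both loops start from a zero context, so their outputs coincide. They diverge afterwards only because of what is fed back.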

2. Challenges and limitations faced by early RNN architectures

Early RNN architectures faced several challenges and limitations that hindered their effectiveness in handling long-term dependencies. The vanishing gradient problem was one major issue, where the gradients used for learning diminished exponentially over time. This limitation made it difficult for RNNs to capture and retain information from earlier time steps.
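The vanishing gradient problem can be seen in a one-unit RNN, h_t = tanh(w * h_{t-1} + x_t). By the chain rule, the gradient of the final state with respect to the initial state is the product of the per-step factors w * (1 - h_t^2). When |w| < 1, each factor is smaller than |w|, so the product shrinks at least as fast as |w|^T. Below is a plain-Python sketch with a hypothetical weight w = 0.9 and a constant input.

```python
import math

def gradient_through_time(w, inputs):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h = 0.0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return grad

for steps in (5, 20, 50):
    g = gradient_through_time(w=0.9, inputs=[0.5] * steps)
    print(f"{steps:>3} steps: |dh_T/dh_0| = {abs(g):.2e}")
```

The printed magnitudes fall rapidly as the number of steps grows. This is why the earliest time steps receive almost no learning signal.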

Another challenge was limited memory capacity. Everything an RNN remembers about the past must be compressed into a fixed-size hidden state vector, which makes it hard to model long sequences effectively.

3. Key breakthroughs and advancements in RNN research

Significant breakthroughs in RNN research have paved the way for improved performance and capabilities. Some notable advancements include:

Long Short-Term Memory (LSTM): LSTM, introduced by Hochreiter and Schmidhuber in 1997, was designed to mitigate the vanishing gradient problem. It introduced a memory cell whose contents are regulated by multiplicative gates, allowing the network to retain and propagate information over many more time steps. This innovation greatly enhanced the ability of RNNs to capture long-term dependencies.

Gated Recurrent Unit (GRU): A simplified variant of LSTM, GRU was proposed by Cho et al. in 2014. GRU introduced a gating mechanism that combined the forget and input gates of LSTM into a single update gate. This reduced the complexity of the model while still achieving comparable performance to LSTM.

Bidirectional RNNs: To capture both past and future context information, bidirectional RNNs were introduced. They combine two separate RNNs: one processing the sequence in the forward direction and another processing it in the reverse direction. This allows the model to capture contextual information from both directions, enhancing its predictive capabilities.

Attention Mechanism: The attention mechanism, introduced in 2014 by Bahdanau et al., revolutionized the field of natural language processing and sequence modeling. It allows the model to selectively focus on different parts of the input sequence, enabling more accurate predictions. Attention mechanisms have become a standard component in various sequence-to-sequence tasks.
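As an illustration, the following NumPy sketch computes additive attention in the style of Bahdanau et al. for a single decoder step. Each encoder state is scored against the current decoder state, the scores are normalized with a softmax, and the context vector is the resulting weighted average of encoder states. The parameter names (`W_s`, `W_h`, `v`) and sizes are hypothetical, and the weights are random rather than learned.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())  # subtract the max for numerical stability
    return e / e.sum()

def additive_attention(decoder_state, encoder_states, W_s, W_h, v):
    """Additive (Bahdanau-style) attention for one decoder step.

    decoder_state:  (dec_units,)
    encoder_states: (T, enc_units), one vector per input position
    Returns (context of shape (enc_units,), weights of shape (T,)).
    """
    # Score every input position against the current decoder state.
    scores = np.tanh(decoder_state @ W_s + encoder_states @ W_h) @ v  # (T,)
    weights = softmax(scores)              # non-negative, sums to 1
    context = weights @ encoder_states     # weighted average of encoder states
    return context, weights

rng = np.random.default_rng(0)
T, enc_units, dec_units, attn_units = 7, 6, 5, 4
encoder_states = rng.normal(size=(T, enc_units))
decoder_state = rng.normal(size=dec_units)
W_s = rng.normal(size=(dec_units, attn_units))
W_h = rng.normal(size=(enc_units, attn_units))
v = rng.normal(size=attn_units)

context, weights = additive_attention(decoder_state, encoder_states, W_s, W_h, v)
print(context.shape, weights.shape)  # (6,) (7,)
```

The `weights` vector shows how much each input position contributes to the current prediction. This "selective focus" is what the attention mechanism adds on top of the recurrent encoder.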

These breakthroughs and advancements in RNN research have significantly improved the performance and capabilities of RNN models. Researchers continue to explore novel architectures and techniques to further enhance RNNs and their applications in various domains.

```python
import tensorflow as tf

# Define an LSTM layer; return_sequences=True returns the hidden state at every time step
lstm_layer = tf.keras.layers.LSTM(units=100, return_sequences=True)

# Define a GRU layer
gru_layer = tf.keras.layers.GRU(units=100, return_sequences=True)

# Example input (batch_size, time_steps, input_features)
inputs = tf.random.normal([32, 10, 8])

# Outputs of the LSTM and GRU
lstm_output = lstm_layer(inputs)
gru_output = gru_layer(inputs)

print("LSTM Output Shape:", lstm_output.shape)  # (32, 10, 100)
print("GRU Output Shape:", gru_output.shape)    # (32, 10, 100)
```
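One way to see why GRUs are lighter than LSTMs is to count trainable parameters. The sketch below assumes Keras's default layer settings: one bias vector per gate block, plus, for GRU, the TF 2.x default `reset_after=True`, which adds a second bias per block. Under those assumptions the counts should agree with `count_params()` on the layers above once they are built, though it is worth checking against your TensorFlow version. The helper names are hypothetical.

```python
def lstm_params(input_dim, units):
    # 4 gate blocks (input, forget, cell candidate, output), each with
    # input weights, recurrent weights and a bias vector.
    return 4 * (units * (input_dim + units) + units)

def gru_params(input_dim, units, reset_after=True):
    # 3 gate blocks (update, reset, candidate). With reset_after=True each
    # block carries two bias vectors instead of one.
    biases = 2 * units if reset_after else units
    return 3 * (units * (input_dim + units) + biases)

print("LSTM parameters:", lstm_params(8, 100))  # 43600
print("GRU parameters:", gru_params(8, 100))    # 33000
```

With 8 input features and 100 units, the GRU needs roughly three quarters of the LSTM's parameters. Its three gate blocks versus the LSTM's four account for most of that saving.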

Key Features and Advantages of RNNs

Recurrent Neural Networks (RNNs) possess several key features and advantages that make them a powerful tool for processing sequential data with variable lengths. These features enable RNNs to excel in various domains, including natural language processing and speech recognition. Let’s explore some of the notable features and advantages of RNNs:

- Handling Sequential Data: RNNs are specifically designed to handle sequential data, such as time series or sentences. Unlike feedforward neural networks, which process each input independently, RNNs take into account the order of data points in a sequence and the dependencies between them. This makes them particularly suitable for tasks that involve analyzing and predicting patterns in sequential data.

- Long-term Dependencies: RNNs maintain a hidden state that acts as memory, allowing them to process and retain information over time. In principle this lets RNNs capture long-term dependencies, which is crucial in tasks that depend on historical context. In practice, gated variants such as LSTM and GRU are usually needed when the relevant context lies far back. For example, in natural language processing, RNNs can interpret a word in a sentence based on the context of the preceding words.

- Versatility: RNNs have versatile applications across various domains. In natural language processing, RNNs can be used for tasks like language translation, sentiment analysis, and text generation. In speech recognition, RNNs can process audio data and convert it into text. Additionally, RNNs find applications in image captioning, stock market prediction, recommendation systems, and many other fields that involve sequential data analysis.

- Flexibility with Variable Lengths: Unlike feedforward networks, which expect a fixed-size input, RNNs apply the same weights at every time step and can therefore handle input sequences of variable lengths. This flexibility enables RNNs to process texts or time series of different lengths, making them adaptable to real-world scenarios where data lengths vary.

- Training on Partial Sequences: RNNs can learn from and make predictions based on partial sequences of data. This is particularly useful when working with sequential data that comes in chunks or streaming data where the full sequence is not yet available.
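The partial-sequence point can be illustrated with a small NumPy sketch using hypothetical weights and sizes. Feeding a sequence through a vanilla RNN in chunks, while carrying the hidden state from one chunk to the next, produces the same final state as processing the whole sequence at once. Keras recurrent layers expose a similar idea through the `stateful=True` option, which keeps the state between batches.

```python
import numpy as np

rng = np.random.default_rng(42)
units, features = 16, 4
W_x = rng.normal(scale=0.3, size=(features, units))
W_h = rng.normal(scale=0.3, size=(units, units))
b = np.zeros(units)

def run_rnn(chunk, h):
    """Advance a vanilla RNN over `chunk` (time_steps, features) starting from state h."""
    for x_t in chunk:
        h = np.tanh(x_t @ W_x + h @ W_h + b)
    return h

sequence = rng.normal(size=(30, features))

# Process the whole sequence at once.
h_full = run_rnn(sequence, np.zeros(units))

# Process the same data as it "arrives" in chunks of 7 steps, carrying the state forward.
h_stream = np.zeros(units)
for start in range(0, len(sequence), 7):
    h_stream = run_rnn(sequence[start:start + 7], h_stream)

print(np.allclose(h_full, h_stream))  # True
```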

By leveraging their ability to process variable-length sequential data, their memory over time, and their versatility across domains, RNNs have proven to be a valuable tool for numerous applications. From natural language processing to speech recognition, RNNs offer powerful capabilities for analyzing, understanding, and generating sequential data.

```python
# TensorFlow example for padding sequences
import tensorflow as tf

# Example sequences of variable lengths
sequences = [
    [1, 2, 3],
    [4, 5],
    [6],
]

# Pad sequences to a uniform length (zeros appended at the end)
padded_sequences = tf.keras.preprocessing.sequence.pad_sequences(sequences, padding='post')

print("Padded Sequences:")
print(padded_sequences)
# [[1 2 3]
#  [4 5 0]
#  [6 0 0]]
```
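Padding alone means the RNN would also read the trailing zeros as data. A mask records which positions are real so that a recurrent layer can skip the padded steps. In Keras, a `Masking` layer or `Embedding(mask_zero=True)` produces such a mask. Below is a plain-Python sketch with a hypothetical `pad_post` helper that mirrors the `padding='post'` result above and builds the mask alongside it.

```python
def pad_post(sequences, value=0):
    """Right-pad sequences to the longest length and build a boolean mask.

    Returns (padded, mask), where mask[i][t] is True for real time steps
    and False for padding.
    """
    max_len = max(len(s) for s in sequences)
    padded = [list(s) + [value] * (max_len - len(s)) for s in sequences]
    mask = [[t < len(s) for t in range(max_len)] for s in sequences]
    return padded, mask

padded, mask = pad_post([[1, 2, 3], [4, 5], [6]])
print(padded)  # [[1, 2, 3], [4, 5, 0], [6, 0, 0]]
print(mask)    # [[True, True, True], [True, True, False], [True, False, False]]
```

A mask-aware recurrent layer simply keeps its previous state at masked positions. The final state of `[4, 5, 0]` is then the state after reading `[4, 5]`, not after an extra zero.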

Types of RNNs and Architectural Variations

Recurrent Neural Networks (RNNs) are a class of artificial neural networks specifically designed for sequential data processing. They have demonstrated remarkable success in various fields, including natural language processing, speech recognition, and time series analysis. Let’s explore some of the different types of RNNs and architectural variations:

Vanilla RNN:

The vanilla RNN, also known as the simple RNN, is the most basic form of RNN. At each time step, its hidden layer combines the current input with its own hidden state from the previous time step to produce a new hidden state and an output for that step. However, vanilla RNNs often suffer from the vanishing gradient problem, where the gradients diminish as they propagate back in time, making it challenging to capture long-term dependencies.

Long Short-Term Memory (LSTM) Networks:

LSTM networks are a type of RNN designed to mitigate the vanishing gradient problem. They introduce memory cells and gates that selectively remember or forget information over many time steps. With input, output, and forget gates, LSTM networks can retain important information for longer periods, improving their ability to model long-term dependencies.
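To make the gating concrete, here is a minimal NumPy sketch of a single LSTM time step. It assumes the common formulation with input (i), forget (f) and output (o) gates plus a tanh candidate (g). The function name `lstm_step`, the stacked weight layout and the i, f, g, o ordering are choices made for this illustration, not a specific library's internals.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (input_dim, 4*units), U: (units, 4*units), b: (4*units,)
    The stacked blocks are ordered i, f, g, o in this sketch.
    """
    z = x_t @ W + h_prev @ U + b
    i, f, g, o = np.split(z, 4, axis=-1)
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)  # input, forget, output gates
    g = np.tanh(g)                                # candidate cell values
    c_t = f * c_prev + i * g                      # forget old info, write new info
    h_t = o * np.tanh(c_t)                        # expose a gated view of the cell
    return h_t, c_t

rng = np.random.default_rng(0)
input_dim, units = 8, 16
W = rng.normal(scale=0.1, size=(input_dim, 4 * units))
U = rng.normal(scale=0.1, size=(units, 4 * units))
b = np.zeros(4 * units)

h = np.zeros(units)
c = np.zeros(units)
for x_t in rng.normal(size=(10, input_dim)):
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape, c.shape)  # (16,) (16,)
```

The key line is the additive cell update `c_t = f * c_prev + i * g`. When the forget gate stays near 1, information (and gradient) can pass through many steps without being repeatedly squashed.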

Gated Recurrent Unit (GRU) Networks:

GRU networks are similar to LSTM networks and address the vanishing gradient problem as well. They combine the forget and input gates of LSTM networks into a single “update gate” and merge the cell state and hidden state. GRUs are simpler and computationally less expensive than LSTM networks while still performing well on various tasks.
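A comparable NumPy sketch of one GRU step follows the original formulation, in which the reset gate is applied before the recurrent matrix multiplication. Keras's `GRU` defaults to `reset_after=True`, which applies it afterwards. The function name `gru_step` and the parameter ordering are hypothetical. Sources also differ on whether `z` or `1 - z` weights the previous state; this sketch keeps the previous state when `z` is close to 1.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x_t, h_prev, params):
    """One GRU time step (reset gate applied before the recurrent matmul)."""
    W_z, U_z, b_z, W_r, U_r, b_r, W_h, U_h, b_h = params
    z = sigmoid(x_t @ W_z + h_prev @ U_z + b_z)               # update gate
    r = sigmoid(x_t @ W_r + h_prev @ U_r + b_r)               # reset gate
    h_tilde = np.tanh(x_t @ W_h + (r * h_prev) @ U_h + b_h)   # candidate state
    # No separate cell state: interpolate between old and candidate state.
    return z * h_prev + (1.0 - z) * h_tilde

rng = np.random.default_rng(0)
input_dim, units = 8, 16
params = []
for _ in range(3):  # update, reset, candidate blocks: (W, U, b) each
    params += [rng.normal(scale=0.1, size=(input_dim, units)),
               rng.normal(scale=0.1, size=(units, units)),
               np.zeros(units)]

h = np.zeros(units)
for x_t in rng.normal(size=(10, input_dim)):
    h = gru_step(x_t, h, params)
print(h.shape)  # (16,)
```

Compared with an LSTM step, there is one fewer weight block and no separate cell state. This is where the GRU's lower computational cost comes from.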

Bidirectional RNNs:

Bidirectional RNNs incorporate information from both past and future time steps, enhancing the context captured by the model. In this architecture, the hidden layer is split into two separate layers: one that processes the sequence in the forward direction and another that processes it in the backward direction. Bidirectional RNNs are particularly useful when the current output depends on both past and future inputs. Because the backward pass needs the complete sequence, they suit tasks where the whole input is available up front rather than real-time, step-by-step prediction.
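Here is a minimal NumPy sketch of the idea, assuming two independent vanilla RNNs with hypothetical random weights. One reads the sequence left to right, the other right to left, and their per-step states are concatenated. In Keras, the `tf.keras.layers.Bidirectional` wrapper provides this pattern around any recurrent layer and concatenates the two directions by default.

```python
import numpy as np

def rnn_states(xs, W_x, W_h):
    """Return the hidden state at every step of a vanilla RNN, shape (T, units)."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x_t in xs:
        h = np.tanh(x_t @ W_x + h @ W_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
T, features, units = 6, 4, 5
xs = rng.normal(size=(T, features))
# Separate (hypothetical) weights for each direction.
fw = (rng.normal(scale=0.5, size=(features, units)), rng.normal(scale=0.5, size=(units, units)))
bw = (rng.normal(scale=0.5, size=(features, units)), rng.normal(scale=0.5, size=(units, units)))

forward = rnn_states(xs, *fw)               # step t summarizes x_0 .. x_t
backward = rnn_states(xs[::-1], *bw)[::-1]  # step t summarizes x_t .. x_{T-1}
bidirectional = np.concatenate([forward, backward], axis=-1)
print(bidirectional.shape)  # (6, 10)
```

At every position the concatenated vector combines a summary of everything before it with a summary of everything after it.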

These different types and variations of RNN architectures offer diverse capabilities in capturing sequential dependencies and have their strengths and weaknesses. Researchers and practitioners often choose a specific type or variation according to the requirements of the task at hand.

Real-World Applications of RNNs

Recurrent Neural Networks (RNNs) have found numerous practical applications across different domains. Let’s explore some of the key areas where RNNs are widely used:

- Natural Language Processing (NLP): RNNs have made significant contributions to NLP tasks such as language translation and sentiment analysis. With their ability to capture sequential dependencies, RNNs can effectively translate text from one language to another. Additionally, RNNs can analyze the sentiment of a piece of text, providing valuable insights for sentiment analysis tasks.

- Speech Recognition and Generation: RNNs have played a crucial role in improving speech recognition systems. By processing sequential audio data, RNNs can recognize speech patterns, leading to more accurate and reliable speech recognition results. Furthermore, RNNs can also be employed to generate speech, enabling applications like text-to-speech synthesis and voice assistants.

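```python
import librosa
import numpy as np

# Load an audio file, resampled to 16 kHz; librosa.load returns (waveform, sample_rate)
y, sr = librosa.load('path/to/audio.wav', sr=16000)

# Extract MFCC features: shape (n_mfcc, frames)
mfccs = librosa.feature.mfcc(y=y, sr=sr, n_mfcc=40)

# Example of preprocessing for RNN input: transpose to (time_steps, features)
# and add a batch axis -> (1, frames, 40). Averaging over time instead
# (np.mean(mfccs.T, axis=0)) would collapse the sequence to one 40-dim vector.
mfccs_processed = mfccs.T[np.newaxis, ...]

print("Processed MFCCs:", mfccs_processed.shape)
```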

- Time Series Analysis: RNNs excel in analyzing time-dependent data, making them an excellent choice for time series analysis tasks. In finance, RNNs are commonly used for forecasting future stock prices, predicting market trends, and detecting anomalies. By modeling the temporal dependencies in financial data, RNNs can provide valuable insights for investment decision-making.

- Image and Video Captioning: RNNs have proven instrumental in generating captions for images and videos. In a typical setup, a convolutional neural network encodes the visual content into feature vectors, and an RNN decoder generates the caption one word at a time. Each word is conditioned on the visual features and on the words generated so far, so the caption describes the visual elements in context. This is especially useful in applications like automated image captioning, where RNNs can generate accurate and meaningful descriptions of images.
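For the time series use case, a common preprocessing step is to slice a series into fixed-length windows, each paired with the value that follows it. This gives inputs shaped (samples, time_steps, features), as RNN layers expect. The sketch below uses a hypothetical helper `make_windows` and toy data.

```python
import numpy as np

def make_windows(series, window):
    """Turn a 1-D series into supervised RNN training pairs.

    X has shape (samples, window, 1): each sample is `window` consecutive values.
    y has shape (samples,): the value that immediately follows each window.
    """
    series = np.asarray(series, dtype=float)
    X = np.stack([series[i:i + window] for i in range(len(series) - window)])
    y = series[window:]
    return X[..., np.newaxis], y

X, y = make_windows([1, 2, 3, 4, 5, 6], window=3)
print(X.shape, y.shape)    # (3, 3, 1) (3,)
print(X[0].ravel(), y[0])  # [1. 2. 3.] 4.0
```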

In summary, RNNs have demonstrated their versatility and effectiveness in various real-world applications. Their ability to capture sequential dependencies makes them particularly useful in tasks involving natural language processing, speech analysis, time series analysis, and visual content understanding. By leveraging the power of RNNs, businesses and researchers can unlock new possibilities and improve their performance in these domains.

Challenges and Limitations of RNNs

Recurrent Neural Networks (RNNs) have proven to be powerful models for various sequential data tasks, but they also come with their own set of challenges and limitations. Understanding these challenges is crucial for effectively implementing and utilizing RNNs in practice.

Vanishing and Exploding Gradient Problems

One of the major challenges with training RNNs is the issue of vanishing and exploding gradients. During backpropagation through time, the gradient is multiplied by the recurrent weights (and by the activation's derivative) once per time step. Over many steps it can therefore shrink toward zero (vanish) or grow exponentially (explode). This can make training unstable and hinder the learning process.

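To address these issues, various techniques have been developed. Gradient clipping rescales gradients whose norm exceeds a threshold, preventing them from becoming too large; it targets exploding gradients, not vanishing ones. For vanishing gradients, some work has used activation functions such as the Rectified Linear Unit (ReLU), whose derivative is 1 for positive inputs and so does not shrink gradients the way saturating tanh or sigmoid units can. However, unbounded ReLU activations can make exploding activations more likely in recurrent networks, and gated architectures are the more common remedy.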
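Gradient clipping by global norm can be sketched in a few lines of plain Python. If the combined L2 norm of all gradients exceeds a threshold, every gradient is scaled by the same factor, so the update direction is preserved. The helper `clip_by_global_norm` here is hypothetical. In TensorFlow the equivalent operation is `tf.clip_by_global_norm`, and Keras optimizers accept a `clipnorm` argument that clips each gradient by its own norm instead.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient vectors so their combined L2 norm is <= max_norm.

    Returns (clipped_grads, original_global_norm). The direction is preserved;
    only the magnitude shrinks when it is too large.
    """
    global_norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    if global_norm <= max_norm:
        return grads, global_norm
    scale = max_norm / global_norm
    return [[g * scale for g in vec] for vec in grads], global_norm

grads = [[30.0, 40.0], [0.0]]  # global norm = 50
clipped, norm = clip_by_global_norm(grads, max_norm=5.0)
print(norm, clipped)
```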

Difficulty in Capturing Long-Term Dependencies

Another limitation of RNNs is their inherent difficulty in capturing long-term dependencies in sequential data. While RNNs can theoretically maintain information from the past, their practical ability to retain and use relevant information diminishes over longer sequences. This limitation is sometimes informally described as the “short-term memory” problem of RNNs.

To address this issue, researchers have developed different types of RNN architectures, such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU), which incorporate specialized memory cells and gating mechanisms. These architectures allow RNNs to selectively store and access relevant information over longer sequences, enabling the capture of more intricate dependencies.

Computational Complexity and Training Time Requirements

RNNs can be computationally intensive, especially when dealing with large amounts of sequential data. Because the hidden state at step t depends on the state at step t-1, the time steps of a sequence must be computed one after another. They cannot be parallelized the way convolutional or attention-based layers can. As a result, training RNNs on extensive datasets or complex tasks can be time-consuming and resource-intensive.

To mitigate these challenges, various strategies can be employed. One approach is mini-batch training, where multiple sequences are processed concurrently. This does not remove the sequential dependency across time steps, but it makes much better use of parallel hardware and increases throughput. Another technique is to use parallelism and distributed computing to exploit the computational power of multiple processors or GPUs. Additionally, optimized kernels (such as the cuDNN-backed LSTM and GRU implementations that TensorFlow uses on GPUs when the layer configuration allows) and specialized neural network accelerators can significantly improve the computational efficiency of RNN training.

In conclusion, while RNNs offer great potential for modeling sequential data, they also present challenges and limitations. The issues of vanishing and exploding gradients, capturing long-term dependencies, and the computational complexity of training are crucial factors to consider when working with RNNs. Understanding these challenges can help researchers and practitioners make informed decisions when designing and training RNN models.

Future Directions and Trends in RNN Research

Recurrent Neural Networks (RNNs) have been a popular choice for sequential and time-series data analysis, but the field of RNN research is continually evolving with new advancements and potential applications. Here are some future directions and trends in RNN research:

Advancements in RNN architecture

Researchers are constantly exploring new architectures for sequence modeling. One notable development is the Transformer, which replaces recurrence entirely with attention mechanisms that relate every time step directly to every other time step. Because it does not process positions one at a time, a Transformer can be trained in parallel across a sequence and handles long-range dependencies well. This has made it the leading architecture for tasks like machine translation and language modeling. Ideas from Transformer-based models show great promise for improving how sequential data is represented and understood, including in research that revisits recurrent designs.
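For contrast with recurrence, here is a minimal NumPy sketch of scaled dot-product self-attention, the core operation of Transformer layers: softmax(Q K^T / sqrt(d_k)) V. There is no loop over time steps; every position is compared with every other position in a single matrix product. The projection matrices are hypothetical random values, and the sketch omits multiple heads, masking and positional encodings.

```python
import numpy as np

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, computed for all positions at once."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)                # (T, T) pairwise scores
    scores -= scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True) # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
T, d_model = 5, 8
x = rng.normal(size=(T, d_model))
# Hypothetical projection matrices for queries, keys and values.
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

out, weights = scaled_dot_product_attention(x @ W_q, x @ W_k, x @ W_v)
print(out.shape, weights.shape)  # (5, 8) (5, 5)
```

The (T, T) weight matrix is what gives direct access to distant positions. It is also why cost grows quadratically with sequence length, whereas an RNN's cost grows linearly.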

Integration of RNNs with other deep learning techniques

Integrating RNNs with other deep learning techniques, such as attention mechanisms, has become an active area of research. Attention mechanisms allow RNNs to focus on specific parts of the input sequence when making predictions, improving the model’s ability to capture relevant information. This integration enhances the expressive power and performance of RNNs in tasks like machine translation, image captioning, and speech recognition. Researchers are exploring various attention mechanisms and their synergistic effects with RNNs to create more powerful models for sequential data analysis.

Potential applications in healthcare, robotics, and autonomous systems

RNNs have shown immense potential in various domains, and future research aims to leverage their capabilities in healthcare, robotics, and autonomous systems. In healthcare, RNNs can be applied to tasks like disease prediction, patient monitoring, and personalized treatment recommendation. RNNs can model temporal dependencies and make predictions based on historical patient data, aiding in early detection and intervention.

In robotics and autonomous systems, RNNs can be utilized for tasks like motion planning, control, and decision-making. RNNs can capture the dynamics of the environment and make predictions based on past observations, enabling more robust and adaptive systems.

Overall, the future of RNN research is focused on advancing architecture, integrating with other deep learning techniques, and exploring novel applications in fields like healthcare, robotics, and autonomous systems. These advancements have the potential to revolutionize various industries and lead to significant improvements in data analysis and decision-making.

Conclusion: Unleashing the Power of Recurrent Neural Networks

In conclusion, Recurrent Neural Networks (RNNs) have proven to be a powerful tool in various fields, showcasing their ability to process sequential data and capture temporal dependencies. Throughout this article, we have explored the key insights and applications of RNNs, highlighting their significance in natural language processing, speech recognition, and time series analysis.

RNNs excel in tasks such as language modeling, machine translation, sentiment analysis, and text generation, where understanding context and sequential information is crucial. Through their recurrent connections, RNNs carry information from earlier in a sequence forward. This lets them capture dependencies across time steps that feedforward architectures cannot represent directly.

Moreover, the development of advanced variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) mitigates the vanishing gradient problem and improves the learning and memory capabilities of RNNs. These advancements have paved the way for breakthroughs in language understanding, speech recognition, and time series forecasting.

As the field of RNN research continues to evolve, there are still numerous areas that warrant further exploration and development. For instance, improving the training efficiency of RNNs, handling long-term dependencies more effectively, and integrating RNNs with other architectures like convolutional neural networks (CNNs) and transformers are exciting avenues for future research.

Ultimately, RNNs have unleashed a new era of possibilities in the realm of sequential data processing. Their ability to capture long-term dependencies and process temporal information has revolutionized various applications across different industries. As researchers and practitioners delve deeper into the potential of RNNs, we can expect even more groundbreaking advancements, leading to enhanced performance and applications in the future. It is an exciting time in the field of RNN research, and the journey to unlock their full potential has just begun.

References:

[1] P. J. Werbos, “The Roots of Backpropagation: From Ordered Derivatives to Neural Networks and Political Forecasting.” Wiley, 1994.

[2] Y. Bengio, P. Simard, and P. Frasconi, “Learning long-term dependencies with gradient descent is difficult.” IEEE Transactions on Neural Networks, 1994.

[3] S. Hochreiter and J. Schmidhuber, “Long Short-Term Memory.” Neural Computation, 1997.

[4] K. Cho et al., “Learning phrase representations using RNN encoder-decoder for statistical machine translation.” EMNLP, 2014.

[5] D. Bahdanau et al., “Neural machine translation by jointly learning to align and translate.” ICLR, 2015.