Python has emerged as one of the most popular programming languages in the field of data science. Its simplicity, versatility, and extensive library support make it the preferred choice among data scientists and analysts. Python libraries play a crucial role in enhancing functionality and efficiency, enabling professionals to perform complex data analysis tasks with ease.

Python in Data Science: Python offers a wide range of tools and functionalities that cater specifically to the needs of data scientists. Its intuitive syntax and readability make it an ideal language for data manipulation, analysis, and visualization. Python’s extensive support for scientific computing, machine learning, and statistical modeling further solidifies its position as the go-to language for data science projects.

Importance of Libraries: Python libraries are pre-written code modules that provide a collection of functions and methods to accomplish specific tasks. Integrating these libraries into data science projects saves time and effort by providing ready-made implementations of common algorithms and data structures. These libraries not only enhance functionality but also significantly improve the execution speed, making them indispensable for data scientists.

Functionality Enhancement: Python libraries such as NumPy, Pandas, and SciPy offer a range of functionality for data manipulation, preprocessing, and analysis. These libraries provide efficient data structures and algorithms, enabling data scientists to handle large datasets efficiently. Additionally, libraries like Matplotlib and Seaborn facilitate data visualization, allowing professionals to gain insights from complex data through intuitive visual representations.

Efficiency Improvement: Many Python libraries, NumPy chief among them, implement their performance-critical parts in lower-level compiled languages such as C, C++, or Fortran and provide optimized implementations of common algorithms. By using these libraries, data scientists get the performance of compiled code while working in a high-level, user-friendly Python environment. Delegating heavy numerical work to this compiled code, instead of looping in the Python interpreter, can make data science tasks run much faster.
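As a rough illustration of the efficiency point, the sketch below computes the same sum of squares twice: once with a plain Python loop and once with NumPy, whose inner loop runs in compiled code. The array size and repeat count are arbitrary assumptions, and the measured times depend entirely on your hardware and library versions, so no particular speedup is claimed here.

```python
import timeit

import numpy as np

# One million floating-point values (size chosen only for illustration)
values = np.arange(1_000_000, dtype=np.float64)
values_list = values.tolist()

def sum_of_squares_loop(xs):
    # Pure-Python loop: every multiplication and addition runs in the interpreter
    total = 0.0
    for x in xs:
        total += x * x
    return total

def sum_of_squares_numpy(arr):
    # Vectorized: the loop runs inside NumPy's compiled code
    return float(np.dot(arr, arr))

loop_result = sum_of_squares_loop(values_list)
numpy_result = sum_of_squares_numpy(values)

# Time three repetitions of each approach
loop_time = timeit.timeit(lambda: sum_of_squares_loop(values_list), number=3)
numpy_time = timeit.timeit(lambda: sum_of_squares_numpy(values), number=3)

print("Results agree:", np.isclose(loop_result, numpy_result))
print(f"Loop: {loop_time:.3f}s, NumPy: {numpy_time:.3f}s")
```

Both functions return the same value (up to floating-point rounding); only where the looping happens differs.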

In short, Python libraries play a pivotal role in the field of data science. By harnessing them, data scientists can extend functionality, improve efficiency, and streamline their data analysis workflows. The breadth and versatility of the Python library ecosystem let professionals tackle complex data science challenges, which is a major reason Python is so widely used by data scientists.

Exploratory Data Analysis (EDA) Libraries

To perform effective exploratory data analysis (EDA), it helps to use libraries that specialize in data manipulation and analysis, numerical array processing, and data visualization. Here are some widely used EDA libraries:

Pandas:

Description: Pandas is a versatile library that provides highly efficient data structures and data analysis tools. It is built on top of NumPy and offers easy-to-use data manipulation and analysis capabilities.

Key Features:

- It provides data structures like DataFrames and Series, which allow for easy indexing, slicing, and reshaping of data.

- Pandas offers a wide range of functions for data cleaning, data wrangling, transforming, merging, and aggregating datasets.

- It supports handling missing values, working with time series data, and integrating data from different sources.

- Pandas is highly compatible with other libraries and tools, making it an essential part of any EDA workflow.

Reference: Pandas Documentation

```python
import pandas as pd

# Creating a DataFrame from a dictionary
data = {'Name': ['John', 'Anna', 'Peter', 'Linda'],
        'Age': [28, 34, 29, 42],
        'City': ['New York', 'Paris', 'Berlin', 'London']}

df = pd.DataFrame(data)

# Display the DataFrame
print(df)

# Basic data manipulation - filtering rows where Age is greater than 30
over_30 = df[df['Age'] > 30]

print("\nPeople older than 30:")
print(over_30)
```

Output:

```
    Name  Age      City
0   John   28  New York
1   Anna   34     Paris
2  Peter   29    Berlin
3  Linda   42    London

People older than 30:
    Name  Age    City
1   Anna   34   Paris
3  Linda   42  London
```
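The key features above also mention handling missing values, merging, and aggregating. The sketch below extends the same toy data to show all three; the missing age and the `salaries` table (departments and salary figures) are invented purely for illustration.

```python
import numpy as np
import pandas as pd

people = pd.DataFrame({
    "Name": ["John", "Anna", "Peter", "Linda"],
    "Age": [28, 34, np.nan, 42],          # Peter's age is missing (NaN)
    "City": ["New York", "Paris", "Berlin", "London"],
})

# Hypothetical second table, used only to demonstrate merging
salaries = pd.DataFrame({
    "Name": ["John", "Anna", "Peter", "Linda"],
    "Department": ["Sales", "IT", "IT", "Sales"],
    "Salary": [50000, 65000, 60000, 70000],
})

# Count missing values, then fill them with the column mean
print("Missing ages:", people["Age"].isna().sum())
people["Age"] = people["Age"].fillna(people["Age"].mean())

# Merge the two tables on the shared 'Name' column
merged = people.merge(salaries, on="Name", how="inner")

# Aggregate: average age and salary per department
summary = merged.groupby("Department")[["Age", "Salary"]].mean()
print(summary)
```

Filling with the mean is only one strategy; `dropna()` or a domain-specific default may be more appropriate depending on the data.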

NumPy:

Description: NumPy (Numerical Python) is a fundamental library for scientific computing in Python. It provides support for large, multi-dimensional arrays and matrices, along with an extensive collection of mathematical functions to operate on these arrays.

Key Features:

- NumPy arrays offer fast and efficient data storage and manipulation, making it ideal for handling large datasets.

- It includes a comprehensive collection of mathematical functions for data computation, such as statistics, linear algebra, Fourier transforms, and random number generation.

- NumPy arrays can be easily integrated with other libraries for seamless data processing and analysis.

Reference: NumPy Documentation

```python
import numpy as np

# Creating a NumPy array
arr = np.array([[1, 2, 3], [4, 5, 6]])

# Performing mathematical operations
print("Original array:\n", arr)
print("\nArray after adding 5:\n", arr + 5)
print("\nMean of all elements:", np.mean(arr))
```

Output:

```
Original array:
 [[1 2 3]
 [4 5 6]]

Array after adding 5:
 [[ 6  7  8]
 [ 9 10 11]]

Mean of all elements: 3.5
```
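The feature list above also mentions statistics, linear algebra, and random number generation. Here is a short sketch of each; the matrix, vector, and random seed are arbitrary values chosen for illustration.

```python
import numpy as np

# Linear algebra: solve the system A @ x = b
A = np.array([[3.0, 1.0], [1.0, 2.0]])
b = np.array([9.0, 8.0])
x = np.linalg.solve(A, b)
print("Solution x:", x)  # [2. 3.]

# Statistics along an axis: axis=0 works down columns, axis=1 across rows
data = np.array([[1, 2, 3], [4, 5, 6]])
print("Column means:", data.mean(axis=0))  # [2.5 3.5 4.5]
print("Row standard deviations:", data.std(axis=1))

# Reproducible random numbers via the Generator API
rng = np.random.default_rng(seed=42)
samples = rng.normal(loc=0.0, scale=1.0, size=5)
print("Random samples:", samples)
```

Seeding the generator makes the random samples repeatable across runs, which helps when sharing an analysis.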

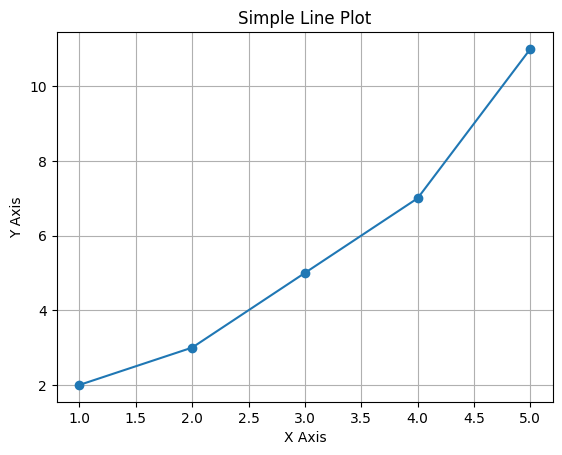

Matplotlib:

Description: Matplotlib is a widely used data visualization library that provides a flexible and comprehensive framework for creating static, animated, and interactive plots in Python.

Key Features:

- Matplotlib offers a wide variety of plots, including line plots, scatter plots, bar plots, histograms, and more.

- It provides extensive customization options for visual elements, such as colors, markers, linestyles, text labels, and annotations.

- Matplotlib supports interactive features like zooming, panning, and saving plots in various image formats.

- It seamlessly integrates with other libraries like NumPy and Pandas, enabling easy data visualization from arrays and data frames.

Reference: Matplotlib Documentation

```python
import matplotlib.pyplot as plt

x = [1, 2, 3, 4, 5]
y = [2, 3, 5, 7, 11]

plt.plot(x, y, marker='o')
plt.title('Simple Line Plot')
plt.xlabel('X Axis')
plt.ylabel('Y Axis')
plt.grid(True)
plt.show()
```
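The features listed above (several plot types, styling options, and saving to image formats) can be combined in a single figure. This sketch uses synthetic data and an illustrative file name; it selects the non-interactive `Agg` backend so it also runs on machines without a display.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend; remove this line to display windows with plt.show()
import matplotlib.pyplot as plt
import numpy as np

# Synthetic data, generated only for illustration
rng = np.random.default_rng(0)
values = rng.normal(loc=50, scale=10, size=500)
x = rng.uniform(0, 10, size=100)
y = 2 * x + rng.normal(0, 1, size=100)

# One figure with two side-by-side panels
fig, (ax_hist, ax_scatter) = plt.subplots(1, 2, figsize=(10, 4))

# Histogram with custom fill and edge colors
ax_hist.hist(values, bins=20, color="steelblue", edgecolor="black")
ax_hist.set_title("Histogram")
ax_hist.set_xlabel("Value")
ax_hist.set_ylabel("Frequency")

# Scatter plot with custom markers and an arrow annotation
ax_scatter.scatter(x, y, s=15, marker="^", color="darkorange", alpha=0.7)
ax_scatter.annotate("roughly y = 2x", xy=(5, 10), xytext=(1, 17),
                    arrowprops=dict(arrowstyle="->"))
ax_scatter.set_title("Scatter Plot")
ax_scatter.set_xlabel("x")
ax_scatter.set_ylabel("y")

fig.tight_layout()
# The image format is inferred from the extension (.png, .pdf, .svg, ...)
fig.savefig("matplotlib_demo.png", dpi=100)
```

Using the object-oriented `fig, ax` interface instead of the `plt.*` state machine makes it explicit which panel each call modifies.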

By utilizing these EDA libraries, data scientists and analysts can effectively manipulate, analyze, and visualize data, gaining valuable insights and making informed decisions.

Machine Learning Libraries

When it comes to machine learning, there are several powerful libraries that provide a wide range of tools and frameworks to streamline the development process. Here are three popular machine learning libraries:

Scikit-learn: Considered one of the most comprehensive machine learning libraries, Scikit-learn offers a wide range of algorithms for tasks such as classification, regression, clustering, and dimensionality reduction. It also provides tools for data preprocessing, model selection, and evaluation. With a simple and intuitive interface, Scikit-learn is widely used for both academic research and industrial applications.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

# Load iris dataset
iris = load_iris()
X, y = iris.data, iris.target

# Create and train a decision tree classifier
clf = DecisionTreeClassifier()
clf.fit(X, y)

# Predict the class of a new sample
sample = [[5, 3.6, 1.4, 0.2]]
prediction = clf.predict(sample)
print("Predicted class:", iris.target_names[prediction][0])
```

Output:

```
Predicted class: setosa
```
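The paragraph above also mentions preprocessing, model selection, and evaluation. Training and predicting on the same data says little about how a model generalizes, so this sketch holds out a test set and cross-validates a preprocessing-plus-model pipeline. The choice of `LogisticRegression`, the 25% test split, and the random seed are illustrative assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Hold out 25% of the samples for a final test, keeping class proportions
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Chain scaling and the classifier so the scaler is fit only on training folds
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# 5-fold cross-validation on the training portion
cv_scores = cross_val_score(model, X_train, y_train, cv=5)
print("Cross-validation accuracy: %.3f +/- %.3f" % (cv_scores.mean(), cv_scores.std()))

# Fit on the full training set and evaluate once on the held-out test set
model.fit(X_train, y_train)
test_accuracy = accuracy_score(y_test, model.predict(X_test))
print("Test accuracy: %.3f" % test_accuracy)
```

Wrapping the scaler in the pipeline avoids leaking statistics from validation or test data into preprocessing.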

TensorFlow: Developed by Google, TensorFlow is a highly popular deep learning framework that focuses on building and training neural networks. It provides a flexible architecture that allows users to create complex models with ease. TensorFlow offers a range of tools and APIs, including Keras, for deep learning tasks such as image recognition, natural language processing, and speech recognition. Its ability to efficiently utilize both CPUs and GPUs makes it a favorite among researchers and developers.
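Keras hides the training loop, but TensorFlow also exposes the lower-level machinery underneath. As a minimal sketch (not how one would normally fit a straight line), the following uses `tf.GradientTape` to fit a linear model to synthetic data by plain gradient descent; the true coefficients, learning rate, and step count are arbitrary choices for illustration.

```python
import tensorflow as tf

# TensorFlow places supported operations on a GPU automatically when one is visible
print("GPUs visible:", tf.config.list_physical_devices("GPU"))

# Synthetic data from y = 3x + 2 plus a little noise
tf.random.set_seed(0)
x = tf.random.uniform((200, 1), minval=-1.0, maxval=1.0)
y = 3.0 * x + 2.0 + tf.random.normal((200, 1), stddev=0.05)

# Trainable parameters, starting at zero
w = tf.Variable(0.0)
b = tf.Variable(0.0)
learning_rate = 0.1

for step in range(300):
    with tf.GradientTape() as tape:
        predictions = w * x + b
        loss = tf.reduce_mean(tf.square(predictions - y))  # mean squared error
    # Automatic differentiation of the loss with respect to w and b
    dw, db = tape.gradient(loss, [w, b])
    # Plain gradient-descent update
    w.assign_sub(learning_rate * dw)
    b.assign_sub(learning_rate * db)

print("Learned w = %.2f, b = %.2f" % (w.numpy(), b.numpy()))
```

The learned parameters should end up close to the 3 and 2 used to generate the data.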

Keras: Keras is a high-level neural network API. It ships with TensorFlow as tf.keras, and since Keras 3 it can also run on JAX and PyTorch backends. Keras lets users build neural networks with minimal code and effort, so developers can quickly prototype and iterate through different architectures and hyperparameters. Its user-friendly and intuitive interface makes it an excellent choice for beginners. Keras also provides building blocks for various neural network architectures, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and transformers.

```python
from tensorflow.keras import Input
from tensorflow.keras.layers import Dense
from tensorflow.keras.models import Sequential

# Define a simple sequential model: 784 inputs -> 32 hidden units -> 10 classes
def create_model():
    model = Sequential([
        Input(shape=(784,)),
        Dense(32, activation='relu'),
        Dense(10, activation='softmax'),
    ])
    return model

# Create a model instance
model = create_model()

# Compile the model
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Model summary
model.summary()
```

Output (the exact table layout varies between TensorFlow/Keras versions):

```
Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 dense (Dense)               (None, 32)                25120
 dense_1 (Dense)             (None, 10)                330
=================================================================
Total params: 25450 (99.41 KB)
Trainable params: 25450 (99.41 KB)
Non-trainable params: 0 (0.00 Byte)
_________________________________________________________________
```
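The model above is compiled but never trained. A minimal sketch of the remaining steps (`fit`, `evaluate`, and `predict`) follows. It feeds random synthetic data with the same 784-feature input shape (the size of a flattened 28x28 image) purely to demonstrate the API, so the resulting accuracy is meaningless; the sample count, epochs, and batch size are arbitrary.

```python
import numpy as np
import tensorflow as tf

# Synthetic stand-in data: 256 samples, 784 features, integer labels 0-9
rng = np.random.default_rng(0)
x_train = rng.random((256, 784), dtype=np.float32)
y_train = rng.integers(0, 10, size=256)

# Same architecture as above
model = tf.keras.Sequential([
    tf.keras.Input(shape=(784,)),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# Train for a few epochs, holding out 20% of the samples for validation
history = model.fit(x_train, y_train, epochs=3, batch_size=32,
                    validation_split=0.2, verbose=0)

# evaluate() returns [loss, accuracy] for the metrics configured above
loss, accuracy = model.evaluate(x_train, y_train, verbose=0)

# Each prediction row is a probability distribution over the 10 classes
probabilities = model.predict(x_train[:3], verbose=0)
print(probabilities.shape)
print(sorted(history.history.keys()))
```

With real data (for example, flattened image pixels), the same three calls apply unchanged.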

By leveraging these machine learning libraries, developers can accelerate the implementation and training of models, making it easier to harness the power of machine learning and create intelligent solutions.

Natural Language Processing (NLP) Libraries

When it comes to Natural Language Processing (NLP), there are several powerful libraries available that assist in text mining, processing, and analysis. Two notable NLP libraries are NLTK (Natural Language Toolkit) and SpaCy.

NLTK (Natural Language Toolkit): NLTK is a comprehensive NLP library widely used for text mining and text processing. It provides tools and resources for tokenization, stemming, lemmatization, part-of-speech tagging, parsing, and sentiment analysis. NLTK is written in Python and offers a user-friendly interface and extensive documentation, making it an excellent choice for beginners in NLP. With its large collection of corpora and pre-trained models, NLTK lets researchers explore various NLP techniques and build their own NLP applications.

```python
import nltk
from nltk.tokenize import word_tokenize

# Download the Punkt tokenizer data used by word_tokenize
# (recent NLTK releases look for 'punkt_tab'; older ones use 'punkt')
nltk.download('punkt')
nltk.download('punkt_tab')

text = "Natural language processing with NLTK is fun and educational."
tokens = word_tokenize(text)
print("Tokens:", tokens)
```

Output:

```
Tokens: ['Natural', 'language', 'processing', 'with', 'NLTK', 'is', 'fun', 'and', 'educational', '.']
```
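The NLTK description also mentions stemming, and frequency counts are a common next step after tokenizing. This sketch uses `RegexpTokenizer` and `PorterStemmer`, which need no downloaded data; the sample sentence and the regular expression are illustrative choices.

```python
from nltk import FreqDist
from nltk.stem import PorterStemmer
from nltk.tokenize import RegexpTokenizer

text = "The runners were running and the runner runs daily studies."

# Keep runs of word characters only, dropping punctuation (no 'punkt' data needed)
tokenizer = RegexpTokenizer(r"\w+")
tokens = [t.lower() for t in tokenizer.tokenize(text)]

# Reduce each token to its stem with the Porter algorithm
stemmer = PorterStemmer()
stems = [stemmer.stem(t) for t in tokens]
print("Stems:", stems)

# Count how often each stem occurs
freq = FreqDist(stems)
print("Most common:", freq.most_common(3))
```

Stems such as "studi" are not dictionary words; when readable base forms are needed, lemmatization (for example, NLTK's `WordNetLemmatizer`, which requires the WordNet data) is the usual alternative.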

SpaCy: SpaCy is an industrial-strength NLP library designed for efficient and scalable processing. It is known for its speed, accuracy, and ease of use. SpaCy provides robust tools for tokenization, part-of-speech tagging, named entity recognition, dependency parsing, and entity linking. It is written in Python and Cython and offers pre-trained pipelines and word vectors for multiple languages. SpaCy also supports training custom models, allowing developers to fine-tune them for specific NLP tasks or domains. With its focus on performance and production use, SpaCy is widely adopted in industry.

```python
import spacy

# Requires the small English pipeline, installed once with:
#   python -m spacy download en_core_web_sm
nlp = spacy.load("en_core_web_sm")

text = "Apple is looking at buying U.K. startup for $1 billion"
doc = nlp(text)

# Print each named entity and its predicted label
for entity in doc.ents:
    print(entity.text, entity.label_)
```

Output:

```
Apple ORG
U.K. GPE
$1 billion MONEY
```
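Trained pipelines are not the only way to get entities. As a minimal sketch, assuming spaCy v3, the following adds SpaCy's rule-based `entity_ruler` component to a blank English pipeline, which needs no model download. The two patterns are illustrative only; this complements, rather than replaces, the statistical models described above, and the same ruler can also be added to a trained pipeline such as `en_core_web_sm`.

```python
import spacy

# A blank English pipeline: tokenizer only, no trained components
nlp = spacy.blank("en")

# Add a rule-based entity recognizer and register example phrase patterns
ruler = nlp.add_pipe("entity_ruler")
ruler.add_patterns([
    {"label": "ORG", "pattern": "Apple"},
    {"label": "GPE", "pattern": "U.K."},
])

doc = nlp("Apple is looking at buying U.K. startup for $1 billion")

# Only the phrases matched by the rules are tagged; "$1 billion" is not
entities = [(ent.text, ent.label_) for ent in doc.ents]
print(entities)
```

Rules like these are handy for domain terms (product names, internal codes) that a general-purpose model was never trained on.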

These NLP libraries are invaluable resources for researchers, developers, and data scientists working on text mining, text processing, and NLP-related tasks. They simplify complex NLP operations and provide efficient solutions for a wide range of natural language processing challenges. Whether you are a beginner or an experienced professional, NLTK and SpaCy offer the necessary tools to develop powerful NLP applications.

Data Visualization Libraries

When it comes to visualizing data, there are various libraries available that offer different features and functionalities. Here are two popular data visualization libraries:

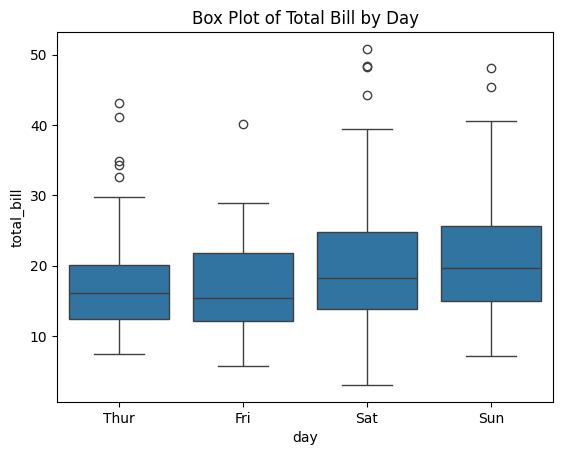

Seaborn: Seaborn is a powerful Python library built on top of Matplotlib. It provides a high-level interface for creating statistical graphics. Seaborn offers a wide range of visually appealing and informative visualizations, making it an excellent choice for exploratory data analysis.

Some key features of Seaborn include:

- Statistical Plotting: Seaborn provides a variety of statistical plots, including distribution plots, regression plots, categorical plots, and more. These plots help in understanding the data’s underlying patterns and relationships.

- Color Palettes: Seaborn offers a wide range of color palettes to choose from, making it easy to customize and enhance the visual appearance of plots.

- Default Aesthetics: Seaborn comes with well-designed default settings, resulting in visually pleasing plots without requiring much customization.

```python
import matplotlib.pyplot as plt
import seaborn as sns

# Load the example "tips" dataset
# (fetched from the online seaborn-data repository on first use, then cached)
tips = sns.load_dataset("tips")

# Create a box plot showing the distribution of total bill amounts by day
sns.boxplot(x="day", y="total_bill", data=tips)
plt.title('Box Plot of Total Bill by Day')
plt.show()
```

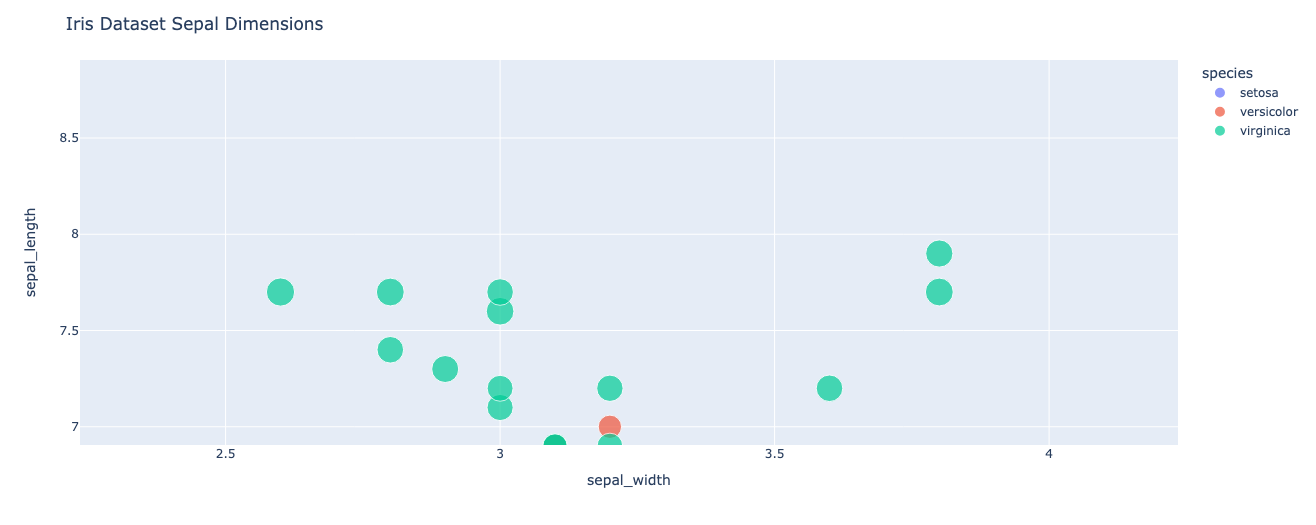

Plotly: Plotly is an interactive and dynamic data visualization library that supports both Python and JavaScript. It offers a range of visualization types and allows users to create interactive plots and dashboards.

Some key features of Plotly include:

- Interactive Visualizations: Plotly provides interactive functionality, allowing users to explore and interact with data directly on the plots. This includes zooming, panning, hovering, and more.

- Web-based Dashboards: Combined with Dash, Plotly's open-source framework for analytical web applications, you can build web-based dashboards that can be easily shared and accessed online. This is particularly useful for real-time visualizations or collaborative data analysis.

- Wide Range of Chart Types: Plotly supports various chart types, such as line charts, scatter plots, bar charts, pie charts, and more. This versatility enables users to choose the most suitable visualization for their data.

```python
import plotly.express as px

# Load the iris dataset bundled with Plotly Express
df = px.data.iris()

# Create a scatter plot colored by species, with marker size set by petal length
fig = px.scatter(df, x="sepal_width", y="sepal_length", color="species",
                 size='petal_length', hover_data=['petal_width'])
fig.update_layout(title='Iris Dataset Sepal Dimensions')
fig.show()
```

Both Seaborn and Plotly are powerful libraries that cater to different visualization needs. While Seaborn focuses on statistical data visualization, Plotly offers interactive and dynamic features. Depending on your requirements, you can choose the library that best meets your needs and preferences.

Conclusion: Embracing the Power of Python Libraries in Data Science

In this article, we delved into the world of data science and explored the transformative power of Python libraries. We discussed how these libraries are essential tools for data scientists, enabling them to analyze and manipulate data efficiently. Let’s recap the key Python libraries covered in this article:

- NumPy: This library provides support for large, multidimensional arrays and matrices, along with mathematical functions to perform operations on them. NumPy is the foundation for scientific computing in Python, making it a crucial library for data scientists.

- Pandas: Pandas is a powerful library for data manipulation and analysis. It offers data structures like dataframes, which facilitate data cleaning, transformation, and exploration. With Pandas, data scientists can efficiently handle large datasets.

- Matplotlib: Matplotlib is a popular library for data visualization. It provides a wide range of plotting functionalities, allowing data scientists to create informative and visually appealing graphs, charts, and histograms.

- Scikit-learn: Scikit-learn is a machine learning library that offers various algorithms and tools for classification, regression, clustering, and more. It simplifies the implementation of machine learning models and provides built-in functions for model evaluation and selection.

- TensorFlow: TensorFlow is an open-source library for machine learning and deep learning. It enables data scientists to design and train complex neural networks, making it a vital tool for tasks like image recognition, natural language processing, and recommendation systems.

- Keras: Keras is a high-level neural networks API that ships with TensorFlow (and, since Keras 3, can also run on JAX or PyTorch). It provides a user-friendly interface for building and training neural networks, making it accessible to both beginners and experts in deep learning.

- Seaborn: Seaborn is a library for statistical data visualization in Python. It is built on top of Matplotlib and closely integrated with pandas data structures, offering a high-level interface for drawing attractive and informative statistical graphics.

- Plotly: Plotly's Python graphing library makes interactive, publication-quality graphs. Examples of plots include line plots, scatter plots, area charts, bar charts, error bars, box plots, histograms, heatmaps, subplots, multiple-axes, polar charts, and bubble charts.

- NLTK: NLTK (Natural Language Toolkit) is a leading platform for building Python programs to work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources such as WordNet, along with a suite of text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning.

- SpaCy: SpaCy is an industrial-strength NLP library designed specifically for production use; it helps you build applications that process and “understand” large volumes of text. It can be used to build information extraction or natural language understanding systems, or to pre-process text for deep learning.

Continuous learning and exploration are crucial in the field of data science. As technologies evolve and new libraries emerge, staying updated and expanding knowledge is essential for data scientists to remain competitive. By continuously exploring new Python libraries and learning how to effectively utilize their capabilities, data scientists can unlock new possibilities and enhance their skillsets.

In conclusion, Python libraries play a pivotal role in data science, providing a wide range of functionality for data manipulation, analysis, visualization, and machine learning. By embracing these powerful libraries and fostering a culture of continuous learning and exploration, data scientists can thrive in their pursuit of data-driven insights and innovation.